scRNA-seq+scATAC-seq integration¶

This tutorial shows loading, preprocessing, VIPCCA integration and visualization of scRNA-seq and scATAC-seq dataset.

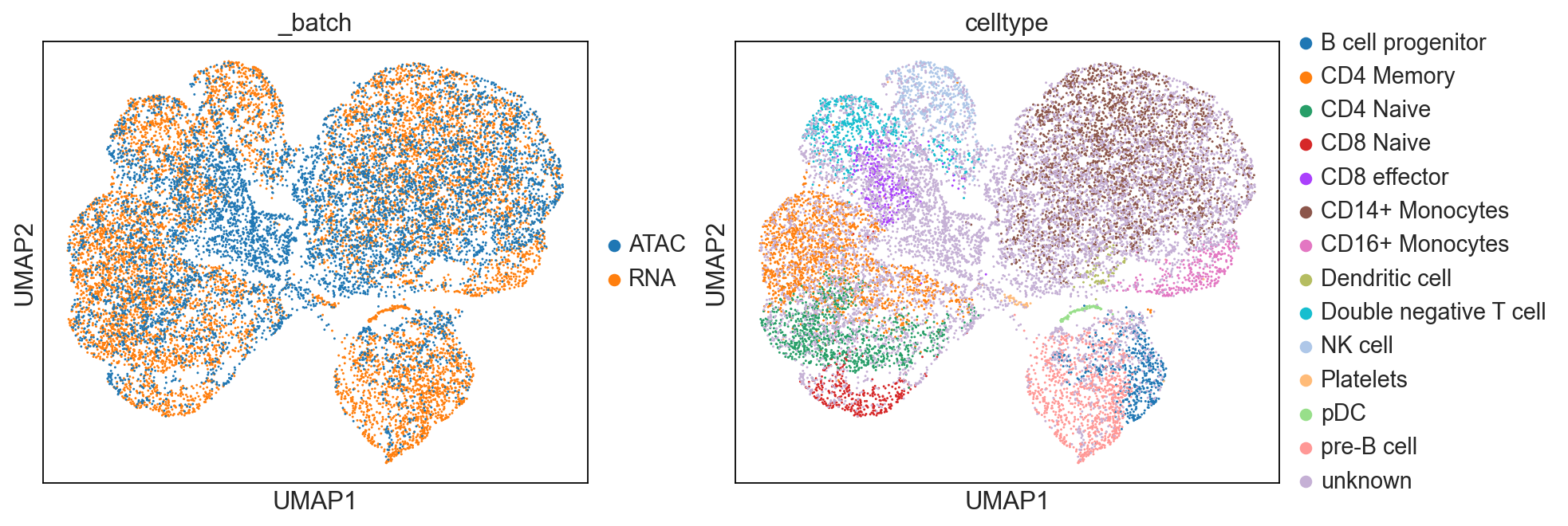

we obtained a scRNA-seq data consisting of gene expression measurements on 33,538 genes in 11,769 cells and a scATAC-seq data consisting of 89,796 open chromatin peaks on 8,728 nuclei, both were produced by 10X Genomics Chromium system and were on PBMCs.

Preprocessing with R¶

For the scRNA-seq data: Seurat have previously pre-processed and clustered a scRNA-seq dataset and annotate 13 celltype, and provide the object here. The 13 cell types include 460 B cell progenitor, 2,992 CD14+ Monocytes, 328 CD16+ Monocytes, 1,596 CD4 Memory, 1,047 CD4 Naïve, 383 CD8 effector, 337 CD8 Naïve, 74 Dendritic cell, 592 Double negative T cell, 544 NK cell, 68 pDC, 52 Plateletes, and 599 pre-B cell.

For the scATAC-seq data: First, we load in the provided peak matrix and collapse the peak matrix to a “gene activity matrix” using Signac. Then we filtered out cells that have with fewer than 5,000 total peak counts to focus on a final set of 7,866 cells for analysis. See the Signac website for tutorial and documentation for analysing scATAC-seq data.

After preprocessing, you can converting Seurat Object to AnnData via h5Seurat using R packages (SeuratDisk). In this case, the atac.h5ad file will be generated in the corresponding path.

library(SeuratDisk)

SaveH5Seurat(atac, filename = "atac.h5Seurat")

Convert("atac.h5Seurat", dest = "h5ad")

Besides, you can also directly download the processed scRNA-seq dataset and scATAC-seq dataset files in H5ad format.

Importing vipcca package¶

[1]:

import vipcca.model.vipcca as vip

import vipcca.tools.utils as tl

import vipcca.tools.plotting as pl

import vipcca.tools.transferLabel as tfl

import scanpy as sc

from sklearn.preprocessing import LabelEncoder

import matplotlib

matplotlib.use('TkAgg')

# Command for Jupyter notebooks only

%matplotlib inline

import warnings

warnings.filterwarnings('ignore')

from matplotlib.axes._axes import _log as matplotlib_axes_logger

matplotlib_axes_logger.setLevel('ERROR')

Loading data in python¶

[2]:

adata_rna = tl.read_sc_data("./rna.h5ad")

adata_atac = tl.read_sc_data("./geneact.h5ad")

Data preprocessing¶

Here, we filter and normalize each data separately and concatenate them into one AnnData object. For more details, please check the preprocessing API.

[3]:

adata_all= tl.preprocessing([adata_rna, adata_atac])

Trying to set attribute `.obs` of view, copying.

Trying to set attribute `.obs` of view, copying.

VIPCCA Integration¶

[4]:

# Command for Jupyter notebooks only

import os

os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"

# The four hyperparameters epochs, lambda_regulizer, batch_input_size, batch_input_size2 are user-defined parameters.

# Given a dataset with ~10K cells, we suggest to pick up

# a value larger than 600 for epochs,

# a value in the range of [2,10] for lambda_regulizer,

# a value at least less than the number of input features for batch_input_size,

# a value in the range of [8,16] for batch_input_size2.

handle = vip.VIPCCA(adata_all=adata_all,

res_path='./atac_result/',

mode='CVAE',

split_by="_batch",

epochs=20,

lambda_regulizer=2,

batch_input_size=64,

batch_input_size2=14,

)

adata_integrate=handle.fit_integrate()

WARNING:tensorflow:`period` argument is deprecated. Please use `save_freq` to specify the frequency in number of batches seen.

Model: "vae_mlp"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

encoder_input (InputLayer) [(None, 2000)] 0

__________________________________________________________________________________________________

batch_input1 (InputLayer) [(None, 64)] 0

__________________________________________________________________________________________________

encoder_mlp (Functional) [(None, 16), (None, 285088 encoder_input[0][0]

batch_input1[0][0]

__________________________________________________________________________________________________

batch_input2 (InputLayer) [(None, 14)] 0

__________________________________________________________________________________________________

decoder_mlp (Functional) (None, 2000) 542748 encoder_mlp[0][2]

batch_input2[0][0]

__________________________________________________________________________________________________

dense (Dense) (None, 64) 4160 batch_input1[0][0]

__________________________________________________________________________________________________

batch_normalization (BatchNorma (None, 64) 192 dense[0][0]

__________________________________________________________________________________________________

activation (Activation) (None, 64) 0 batch_normalization[0][0]

__________________________________________________________________________________________________

dense_1 (Dense) (None, 64) 4160 activation[0][0]

__________________________________________________________________________________________________

batch_normalization_1 (BatchNor (None, 64) 192 dense_1[0][0]

__________________________________________________________________________________________________

activation_1 (Activation) (None, 64) 0 batch_normalization_1[0][0]

__________________________________________________________________________________________________

concatenate (Concatenate) (None, 2064) 0 encoder_input[0][0]

activation_1[0][0]

__________________________________________________________________________________________________

dense_2 (Dense) (None, 128) 264320 concatenate[0][0]

__________________________________________________________________________________________________

batch_normalization_2 (BatchNor (None, 128) 384 dense_2[0][0]

__________________________________________________________________________________________________

activation_2 (Activation) (None, 128) 0 batch_normalization_2[0][0]

__________________________________________________________________________________________________

dropout (Dropout) (None, 128) 0 activation_2[0][0]

__________________________________________________________________________________________________

dense_3 (Dense) (None, 64) 8256 dropout[0][0]

__________________________________________________________________________________________________

batch_normalization_3 (BatchNor (None, 64) 192 dense_3[0][0]

__________________________________________________________________________________________________

activation_3 (Activation) (None, 64) 0 batch_normalization_3[0][0]

__________________________________________________________________________________________________

dropout_1 (Dropout) (None, 64) 0 activation_3[0][0]

__________________________________________________________________________________________________

dense_4 (Dense) (None, 32) 2080 dropout_1[0][0]

__________________________________________________________________________________________________

batch_normalization_4 (BatchNor (None, 32) 96 dense_4[0][0]

__________________________________________________________________________________________________

tf.math.subtract (TFOpLambda) (None, 2000) 0 encoder_input[0][0]

decoder_mlp[0][0]

__________________________________________________________________________________________________

activation_4 (Activation) (None, 32) 0 batch_normalization_4[0][0]

__________________________________________________________________________________________________

tf.math.reduce_mean (TFOpLambda (1, 1) 0 tf.math.subtract[0][0]

__________________________________________________________________________________________________

dropout_2 (Dropout) (None, 32) 0 activation_4[0][0]

__________________________________________________________________________________________________

tf.math.subtract_1 (TFOpLambda) (None, 2000) 0 tf.math.subtract[0][0]

tf.math.reduce_mean[0][0]

__________________________________________________________________________________________________

encoder_log_var (Dense) (None, 16) 528 dropout_2[0][0]

__________________________________________________________________________________________________

encoder_mean (Dense) (None, 16) 528 dropout_2[0][0]

__________________________________________________________________________________________________

tf.convert_to_tensor (TFOpLambd (None, 2000) 0 decoder_mlp[0][0]

__________________________________________________________________________________________________

tf.cast (TFOpLambda) (None, 2000) 0 encoder_input[0][0]

__________________________________________________________________________________________________

tf.math.square (TFOpLambda) (None, 2000) 0 tf.math.subtract_1[0][0]

__________________________________________________________________________________________________

tf.__operators__.add_1 (TFOpLam (None, 16) 0 encoder_log_var[0][0]

__________________________________________________________________________________________________

tf.math.square_1 (TFOpLambda) (None, 16) 0 encoder_mean[0][0]

__________________________________________________________________________________________________

tf.math.squared_difference (TFO (None, 2000) 0 tf.convert_to_tensor[0][0]

tf.cast[0][0]

__________________________________________________________________________________________________

tf.math.reduce_mean_1 (TFOpLamb () 0 tf.math.square[0][0]

__________________________________________________________________________________________________

tf.math.subtract_2 (TFOpLambda) (None, 16) 0 tf.__operators__.add_1[0][0]

tf.math.square_1[0][0]

__________________________________________________________________________________________________

tf.math.exp (TFOpLambda) (None, 16) 0 encoder_log_var[0][0]

__________________________________________________________________________________________________

tf.math.reduce_mean_2 (TFOpLamb (None,) 0 tf.math.squared_difference[0][0]

__________________________________________________________________________________________________

tf.math.truediv (TFOpLambda) () 0 tf.math.reduce_mean_1[0][0]

__________________________________________________________________________________________________

tf.math.log (TFOpLambda) () 0 tf.math.reduce_mean_1[0][0]

__________________________________________________________________________________________________

tf.math.subtract_3 (TFOpLambda) (None, 16) 0 tf.math.subtract_2[0][0]

tf.math.exp[0][0]

__________________________________________________________________________________________________

tf.math.multiply (TFOpLambda) (None,) 0 tf.math.reduce_mean_2[0][0]

tf.math.truediv[0][0]

__________________________________________________________________________________________________

tf.math.multiply_1 (TFOpLambda) () 0 tf.math.log[0][0]

__________________________________________________________________________________________________

tf.math.reduce_sum (TFOpLambda) (None,) 0 tf.math.subtract_3[0][0]

__________________________________________________________________________________________________

tf.__operators__.add (TFOpLambd (None,) 0 tf.math.multiply[0][0]

tf.math.multiply_1[0][0]

__________________________________________________________________________________________________

tf.math.multiply_2 (TFOpLambda) (None,) 0 tf.math.reduce_sum[0][0]

__________________________________________________________________________________________________

tf.math.multiply_3 (TFOpLambda) (None,) 0 tf.__operators__.add[0][0]

__________________________________________________________________________________________________

tf.math.multiply_4 (TFOpLambda) (None,) 0 tf.math.multiply_2[0][0]

__________________________________________________________________________________________________

tf.__operators__.add_2 (TFOpLam (None,) 0 tf.math.multiply_3[0][0]

tf.math.multiply_4[0][0]

__________________________________________________________________________________________________

tf.math.reduce_mean_3 (TFOpLamb () 0 tf.__operators__.add_2[0][0]

__________________________________________________________________________________________________

add_loss (AddLoss) () 0 tf.math.reduce_mean_3[0][0]

==================================================================================================

Total params: 827,836

Trainable params: 826,080

Non-trainable params: 1,756

__________________________________________________________________________________________________

Epoch 1/20

68/68 [==============================] - 5s 24ms/step - loss: 5699.5386

Epoch 2/20

68/68 [==============================] - 1s 22ms/step - loss: 5387.9050

Epoch 3/20

68/68 [==============================] - 2s 28ms/step - loss: 5354.2480

Epoch 4/20

68/68 [==============================] - 2s 23ms/step - loss: 5326.5728

Epoch 5/20

68/68 [==============================] - 2s 25ms/step - loss: 5316.6463

Epoch 6/20

68/68 [==============================] - 1s 22ms/step - loss: 5305.0356

Epoch 7/20

68/68 [==============================] - 1s 19ms/step - loss: 5304.1235

Epoch 8/20

68/68 [==============================] - 1s 19ms/step - loss: 5302.7018

Epoch 9/20

68/68 [==============================] - 1s 19ms/step - loss: 5290.4808

Epoch 10/20

68/68 [==============================] - 1s 19ms/step - loss: 5281.9169

Epoch 11/20

68/68 [==============================] - 1s 19ms/step - loss: 5289.3734

Epoch 12/20

68/68 [==============================] - 1s 20ms/step - loss: 5285.7788

Epoch 13/20

68/68 [==============================] - 1s 19ms/step - loss: 5285.3835

Epoch 14/20

68/68 [==============================] - 1s 18ms/step - loss: 5288.9461

Epoch 15/20

68/68 [==============================] - 1s 19ms/step - loss: 5277.2054

Epoch 16/20

68/68 [==============================] - 1s 20ms/step - loss: 5281.0600

Epoch 17/20

68/68 [==============================] - 1s 19ms/step - loss: 5281.3589

Epoch 18/20

68/68 [==============================] - 1s 20ms/step - loss: 5275.7597

Epoch 19/20

68/68 [==============================] - 1s 19ms/step - loss: 5276.1251

Epoch 20/20

68/68 [==============================] - 1s 20ms/step - loss: 5279.9665

WARNING:tensorflow:Found duplicated `Variable`s in Model's `weights`. This is usually caused by `Variable`s being shared by Layers in the Model. These `Variable`s will be treated as separate `Variable`s when the Model is restored. To avoid this, please save with `save_format="tf"`.

... storing '_batch' as categorical

... storing 'celltype' as categorical

... storing 'tech' as categorical

Cell type prediction¶

[5]:

atac=tfl.findNeighbors(adata_integrate)

store celltype in adata_integrate.obs[‘celltype’]

[6]:

adata_atac.obs['celltype'] = atac['celltype']

adata = adata_rna.concatenate(adata_atac)

adata_integrate.obs['celltype'] = adata.obs['celltype']

Loading result¶

Loading result from h5ad file: The output.h5ad file of the trained result can be downloaded here

[7]:

adata_integrate = tl.read_sc_data('./atac_result/output_save.h5ad')

UMAP Visualization¶

[8]:

sc.pp.neighbors(adata_integrate, use_rep='X_vipcca')

sc.tl.umap(adata_integrate)

sc.set_figure_params(figsize=[5.5, 4.5])

sc.pl.umap(adata_integrate, color=['_batch', 'celltype'])